How AI Can Help Eliminate Bias in Hiring Processes

Imagine losing out on a talented candidate just because of unconscious bias. It happens more often than you think. Studies show that identical resumes with only the gender changed often result in women being rated as less competent than men. This kind of bias doesn’t just hurt individuals—it holds back businesses too. Diverse teams are 70% more likely to capture new markets, yet many hiring managers still rely on gut feelings instead of fair, data-driven methods.

This is where AI steps in. By automating processes like resume screening and using fairness-aware algorithms, AI turns bias reduction in hiring from a concept into something you can put into practice. With the right tools, you can create a hiring process that’s not only inclusive but also boosts innovation and retention.

Key Takeaways

AI helps cut bias by sorting resumes based on skills only.

Using the same skill tests for everyone makes hiring fair.

Checking AI systems often keeps them fair and free of bias.

Different team members bring new ideas and help businesses grow.

Clear AI decisions build trust and match company values.

Understanding Hiring Bias

What Is Hiring Bias?

Hiring bias happens when decisions about candidates are influenced by stereotypes or personal preferences instead of their actual qualifications. It can be intentional or unconscious, but either way, it creates an uneven playing field. For example, recruiters might favor candidates who share similar backgrounds or interests, even if others are more qualified. This bias often stems from ingrained societal norms and can lead to unfair hiring practices.

Did you know? Unconscious bias can cause recruiters to overlook highly qualified candidates simply because they don’t fit a stereotype. This perpetuates inequality and limits opportunities for underrepresented groups.

Common Types of Bias

Gender Bias

Gender bias occurs when assumptions about a candidate’s abilities are based on their gender. For instance, women are often perceived as less competent in leadership roles. A study by the National Bureau of Economic Research found that blind recruitment processes significantly increased the chances of women being shortlisted for interviews. This shows how removing gender identifiers can help level the playing field.

Racial and Ethnic Bias

Racial and ethnic bias happens when candidates are judged based on their race or cultural background. Those who don’t fit the perceived “norm” may face discrimination, even if they’re highly qualified. This bias can prevent organizations from building diverse teams, which are proven to drive innovation and success.

Unconscious Bias

Unconscious bias is trickier to spot because it operates below the surface. It’s when recruiters unknowingly favor candidates who remind them of themselves or fit certain stereotypes. A survey of 234 HR employees revealed that many believed their colleagues were more biased than they were, highlighting the challenge of recognizing one’s own biases.

The Impact of Bias on Organizations

Reduced Diversity and Innovation

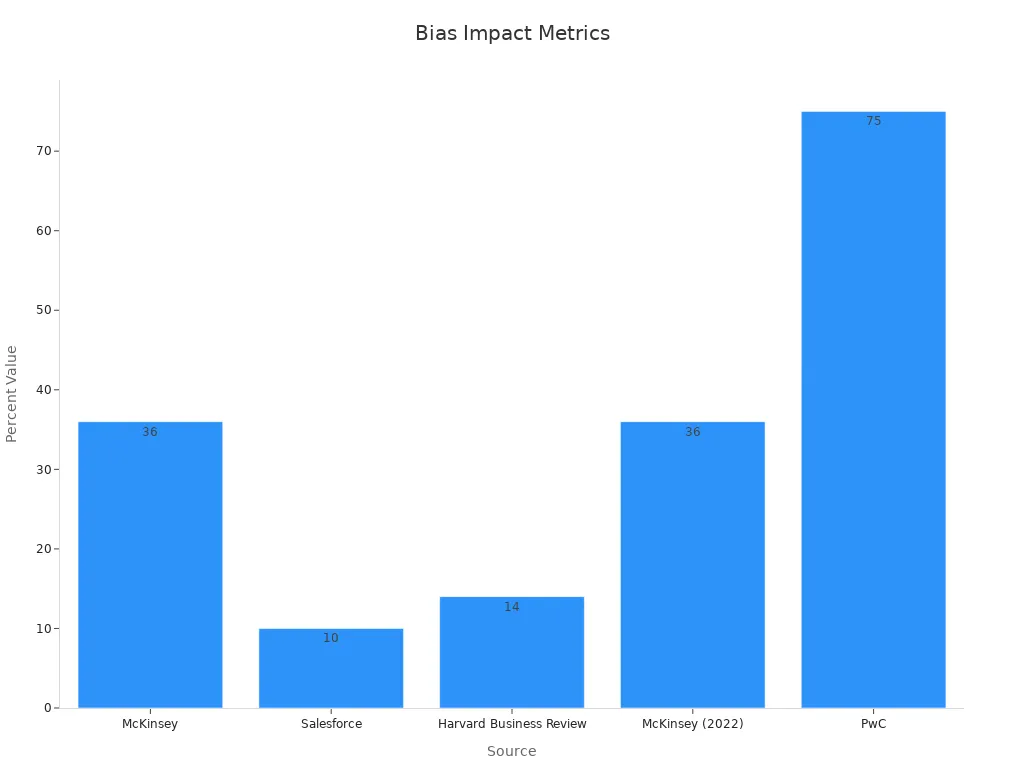

Bias in hiring directly impacts diversity and innovation. According to McKinsey, companies in the top quartile for ethnic diversity are 36% more likely to outperform their peers financially. Diverse teams bring fresh perspectives, which fuel creativity and problem-solving.

Legal and Reputational Risks

Ignoring bias can also lead to legal troubles and damage your company’s reputation. Discrimination lawsuits are costly and can tarnish your brand. On the flip side, fostering inclusivity boosts employee engagement and strengthens your employer brand. For example, Salesforce saw a 10% increase in employee engagement after focusing on inclusivity.

Challenges of Traditional Hiring Methods

Subjectivity in Resume Screening

When you think about resume screening, it might seem straightforward. But did you know recruiters spend only 6-8 seconds on each resume? This quick glance can lead to inconsistencies. You might miss out on great candidates just because their resume didn't catch your eye in those few seconds. The manual process is not only time-consuming but also prone to delays. This means you could lose out on top talent who get snapped up by competitors.

Recruiters often rely on gut feelings, which can vary from one person to another.

Subjectivity can cause variations in candidate selection, impacting the fairness of the process.

Influence of Unconscious Bias in Interviews

Interviews are another area where bias sneaks in. You might not even realize it, but unconscious bias can shape your decisions. This bias often leads to favoring candidates who remind you of yourself. It can reduce workplace diversity, stifling creativity and innovation. McKinsey's research shows that diverse companies tend to outperform their peers. So, when bias affects your hiring, it impacts your bottom line too.

Unconscious bias can disadvantage candidates from underrepresented groups.

Blind recruitment processes have been shown to increase the chances of women and minorities being shortlisted.

Overreliance on Personal Networks

Relying too much on personal networks can limit your talent pool. You might think it's a safe bet to hire someone recommended by a friend. But this approach often reinforces existing stereotypes and biases. Switching to data-driven tools doesn't fix the problem automatically either: while 78% of organizations use data-driven hiring, up to 60% of these algorithms can replicate human biases. In both cases, you're not really expanding your reach or diversifying your team.

Tip: Consider broadening your search beyond personal networks to discover fresh talent and perspectives.

By understanding these challenges, you can start to see why traditional methods might not be the best fit for today's diverse workforce. Embracing new technologies and approaches can help you build a more inclusive and innovative team.

AI & Diversity Hiring: How Tech Can Reduce Bias?

Objective Resume Screening

Analyzing Skills and Qualifications Without Personal Identifiers

AI can transform resume screening by focusing on skills and qualifications instead of personal details. Traditional methods often allow unconscious bias to creep in, but AI tools anonymize resumes by removing names, photos, and other identifiers. This ensures that every candidate gets a fair shot based on their abilities.
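To make the anonymization step concrete, here is a minimal sketch of stripping identifiers from a resume before anyone scores it. The field names, the `anonymize_resume` helper, and the regular expressions are assumptions for illustration only; a real applicant tracking system will have its own schema and will need more thorough redaction.

```python
import re

# Hypothetical field names; a real system will use its own schema.
IDENTIFYING_FIELDS = {"name", "photo_url", "date_of_birth", "gender", "address"}

# Simple patterns for contact details that may appear in free text.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def anonymize_resume(resume: dict) -> dict:
    """Return a copy of the resume without identifying fields or contact details."""
    redacted = {k: v for k, v in resume.items() if k not in IDENTIFYING_FIELDS}
    summary = redacted.get("summary", "")
    summary = EMAIL_PATTERN.sub("[redacted email]", summary)
    summary = PHONE_PATTERN.sub("[redacted phone]", summary)
    redacted["summary"] = summary
    return redacted


candidate = {
    "name": "Jane Doe",
    "gender": "female",
    "photo_url": "https://example.com/jane.jpg",
    "skills": ["Python", "SQL"],
    "years_experience": 6,
    "summary": "Data analyst. Reach me at jane.doe@example.com or +1 555 010 9999.",
}

anonymized = anonymize_resume(candidate)
print(anonymized)
```

Only the skills, experience, and a redacted summary reach the reviewer. Note that free text can still leak identity in other ways (school names, club memberships), so a sketch like this is a starting point rather than a guarantee.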

For example, companies like Unilever have used AI to anonymize candidate profiles and even analyze job descriptions for biased language. The results? A 50% reduction in recruitment costs and a 27% increase in female representation in management roles. This shows that using AI to reduce hiring bias isn’t just a theory; it has delivered measurable results.

However, it’s important to monitor these systems. If AI is trained on biased historical data, it can replicate those biases. Careful implementation and regular audits are key to ensuring fairness.

Standardized Skill Assessments

Using AI to Evaluate Candidates Based on Performance

AI-powered skill assessments provide a consistent way to evaluate candidates. These tools focus on performance rather than personal impressions, which helps eliminate bias. For instance, standardized assessments use the same criteria for everyone, ensuring a level playing field.

Organizations that adopt these assessments report better hiring outcomes. Workers hired through job tests tend to stay 15% longer and perform better than those selected through traditional methods. By using AI, you can make more informed decisions and create a fairer hiring process.

Standardized assessments reduce the influence of personal bias.

They help you focus on what truly matters: a candidate’s ability to do the job.
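One way to picture "the same criteria for everyone" is a shared scoring rubric. The sketch below is an assumption for illustration: the criteria, weights, and the `score_candidate` helper are made up, and a real assessment tool would define its own.

```python
# Hypothetical rubric: criterion -> weight. The weights sum to 1.0.
RUBRIC = {"coding_task": 0.5, "system_design": 0.3, "communication": 0.2}


def score_candidate(ratings: dict) -> float:
    """Weighted score on the same 0-5 scale for every candidate.

    Every criterion must be rated, so no candidate is judged on a
    different set of questions than the others.
    """
    missing = set(RUBRIC) - set(ratings)
    if missing:
        raise ValueError(f"Missing ratings for: {sorted(missing)}")
    return sum(RUBRIC[c] * ratings[c] for c in RUBRIC)


# Made-up ratings, keyed by anonymous candidate IDs.
candidates = {
    "candidate_a": {"coding_task": 4, "system_design": 3, "communication": 5},
    "candidate_b": {"coding_task": 5, "system_design": 4, "communication": 3},
}

ranked = sorted(candidates, key=lambda c: score_candidate(candidates[c]), reverse=True)
for candidate_id in ranked:
    print(candidate_id, round(score_candidate(candidates[candidate_id]), 2))
```

Because the rubric is fixed up front and incomplete ratings are rejected, differences in scores come from performance on the same tasks rather than from which interviewer a candidate happened to get.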

Fairness-Aware Algorithms

Designing Algorithms to Detect and Mitigate Bias

Fairness-aware algorithms are designed to identify and reduce bias in hiring. One common check is demographic parity, which compares acceptance rates across different groups and flags large gaps. These algorithms also include built-in mechanisms to detect skewed outcomes and adjust accordingly.
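As a rough illustration of a demographic parity check, the sketch below computes the acceptance rate per group and the gap between the highest and lowest rate. The group labels and numbers are made up, and the function names are assumptions rather than any vendor's API.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: acceptance rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions):
    """Gap between the highest and lowest acceptance rate; 0 means perfect parity."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up screening outcomes for two groups of 100 applicants each.
decisions = (
    [("group_a", True)] * 30 + [("group_a", False)] * 70
    + [("group_b", True)] * 18 + [("group_b", False)] * 82
)

rates = selection_rates(decisions)
gap = demographic_parity_difference(decisions)
print(rates, round(gap, 2))
```

A gap close to zero suggests balanced acceptance rates. How large a gap is acceptable is a policy decision for your organization, not something the code can answer.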

For example, a study in the Journal of Applied Psychology found that AI-based assessments reduced hiring bias by 25%. This not only creates a more equitable workplace but also improves the quality of hires. By integrating fairness-aware algorithms, you can make bias reduction a reality in your organization.

Tip: Regularly audit your AI systems to ensure they meet fairness standards and adapt to changing needs.

Improved Data Collection and Analysis

Identifying Patterns of Bias in Historical Hiring Data

Have you ever wondered how much bias might be hiding in your hiring data? AI tools can help you uncover it. By analyzing historical hiring data, AI identifies patterns that might not be obvious to the human eye. For example, it can detect if certain groups are consistently overlooked during the hiring process. This insight allows you to take corrective action and create a more equitable recruitment strategy.

AI systems excel at combining small data (like individual hiring decisions) with big data (such as industry-wide trends). This combination enhances accuracy in spotting bias. Autonomous testing also plays a role. It can flag biases in datasets before they influence hiring decisions. Researchers at MIT even showed that re-sampling data can reduce bias. Their AI model learned features like gender and skin color while minimizing categorization biases. This means you can use AI to not only detect but also address bias effectively.
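A simple way to start looking for groups that are consistently overlooked is to compare how often each group advances from one hiring stage to the next. The sketch below assumes you can export, for each past candidate, a group label and the furthest stage reached; the stage names and data are hypothetical.

```python
from collections import Counter

# Hypothetical hiring stages, in order.
STAGES = ["applied", "screened", "interviewed", "offered"]

# Made-up records: (group, furthest stage reached).
records = (
    [("group_a", "applied")] * 40 + [("group_a", "screened")] * 30
    + [("group_a", "interviewed")] * 20 + [("group_a", "offered")] * 10
    + [("group_b", "applied")] * 70 + [("group_b", "screened")] * 20
    + [("group_b", "interviewed")] * 6 + [("group_b", "offered")] * 4
)


def stage_pass_rates(records):
    """For each group, the share of candidates at each stage who advanced to the next."""
    furthest = Counter(records)
    rates = {}
    for group in {g for g, _ in records}:
        # reached[i] = candidates who got at least as far as STAGES[i]
        reached = [
            sum(furthest[(group, s)] for s in STAGES[i:]) for i in range(len(STAGES))
        ]
        rates[group] = {
            f"{STAGES[i]} -> {STAGES[i + 1]}": reached[i + 1] / reached[i]
            for i in range(len(STAGES) - 1)
            if reached[i] > 0
        }
    return rates


funnel = stage_pass_rates(records)
for group in sorted(funnel):
    print(group, {step: round(rate, 2) for step, rate in funnel[group].items()})
```

In this made-up data, group_b passes the resume screen half as often as group_a (30% versus 60%) but does about as well afterwards, which points to the screening step as the place to investigate first.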

Tip: Regularly review your hiring data to ensure your recruitment practices stay fair and inclusive.

Ensuring Transparency in AI Decision-Making

Providing Clear Explanations for AI-Driven Decisions

When AI makes hiring decisions, transparency is key. You need to know how and why the system selects certain candidates. This builds trust with both your team and job applicants. Clear explanations also help you ensure the AI aligns with your company’s values and goals.

A recent survey found that 64% of job candidates believe AI tools are as fair or fairer than human evaluators. However, 79% of workers want to know if AI is part of the hiring process. This shows that while people trust AI, they also value transparency. HR professionals agree—67% think AI is equally or more effective than humans at identifying qualified candidates. By providing clear explanations, you can address concerns about AI’s ability to assess subjective qualities like culture fit.

Transparency doesn’t just benefit candidates. It helps you refine your AI systems. When you understand how decisions are made, you can identify areas for improvement. This ensures your AI tools support your diversity goals and create a fairer hiring process.
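What a "clear explanation" looks like depends on the model. For a simple linear score, one option is to show how much each input contributed. The sketch below assumes such a linear score; the feature names and weights are hypothetical, and more complex models need dedicated explanation methods.

```python
# Hypothetical weights for a simple linear screening score.
WEIGHTS = {"years_experience": 0.4, "skills_matched": 1.5, "assessment_score": 0.05}


def explain_score(features: dict):
    """Return the total score and each feature's contribution, largest first."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    total = sum(contributions.values())
    ordered = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return total, ordered


total, reasons = explain_score(
    {"years_experience": 5, "skills_matched": 4, "assessment_score": 80}
)
for name, value in reasons:
    print(f"{name}: {value:+.2f}")
print(f"total: {total:.2f}")
```

A breakdown like this lets a recruiter tell a candidate which factors mattered most, and it makes it easier to notice when a feature is carrying more weight than your hiring criteria intend.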

Note: Always communicate openly about how AI is used in your hiring process. This fosters trust and encourages more candidates to apply.

Addressing Challenges in AI-Driven Hiring

Risks of Biased Training Data

How Historical Data Can Perpetuate Bias

AI systems are only as good as the data they’re trained on. If the training data contains biases, the AI will likely replicate or even amplify them. For example, if historical hiring data favors certain demographics, the AI might continue to prioritize those groups, leaving others at a disadvantage. This can lead to unfair hiring outcomes and perpetuate existing inequalities.

Researchers have found that AI systems mirror the patterns in their training datasets. When these datasets reflect societal inequities, the AI can unintentionally reinforce them, creating biased decision-making processes.

To address this, you need to diversify your training data. Include a wide range of demographics and experiences to ensure inclusivity. Regular bias audits can also help identify and correct patterns of discrimination. By implementing fairness-aware algorithms, you can balance outcomes and create a more equitable hiring process.
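One pre-processing technique for skewed historical data is reweighing: each training example gets a weight so that group membership and the hiring outcome look statistically independent. This is one option among several, shown here as a sketch on made-up data, not a description of how any particular hiring product works.

```python
from collections import Counter


def reweighing_weights(examples):
    """examples: list of (group, label) pairs -> weight per (group, label) combination.

    weight = P(group) * P(label) / P(group, label), so combinations that are
    rarer than independence would predict get weights above 1.
    """
    n = len(examples)
    group_counts = Counter(g for g, _ in examples)
    label_counts = Counter(y for _, y in examples)
    joint_counts = Counter(examples)
    return {
        (g, y): (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for (g, y) in joint_counts
    }


# Made-up history: label 1 = hired, 0 = not hired.
history = (
    [("group_a", 1)] * 40 + [("group_a", 0)] * 60
    + [("group_b", 1)] * 10 + [("group_b", 0)] * 90
)

weights = reweighing_weights(history)
for key in sorted(weights):
    print(key, round(weights[key], 3))
```

In this example, past hires from group_b are weighted up and past hires from group_a are weighted down, so the weighted hire rate is the same for both groups. A model trained with these weights has less incentive to learn group membership as a shortcut, though the result still needs auditing.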

Lack of Oversight and Accountability

The Importance of Regular Audits and Human Intervention

AI isn’t perfect, and without proper oversight, it can make mistakes. A lack of transparency in AI decision-making can make it hard to spot and fix biased outcomes. Over-reliance on AI without human checks can exacerbate these issues, leading to unfair hiring practices.

AI often struggles to understand context or evaluate cultural fit.

Datasets skewed toward mainstream groups can exclude underrepresented candidates.

Existing social biases in data can perpetuate discrimination.

Regular audits are essential to ensure your AI systems align with ethical hiring practices. Human intervention plays a critical role here. By reviewing AI outputs, you can catch errors and make adjustments. This collaboration ensures your hiring process remains fair and inclusive.
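A recurring audit can be as simple as comparing selection rates and flagging large gaps for a person to review. The sketch below uses the four-fifths rule of thumb, which treats a group's selection rate below 80% of the highest group's rate as a warning sign. The threshold, group labels, and counts are assumptions for illustration.

```python
def impact_ratios(selected: dict, applicants: dict) -> dict:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {g: selected[g] / applicants[g] for g in applicants}
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so there is no disparity to measure.
        return {g: 1.0 for g in rates}
    return {g: rate / best for g, rate in rates.items()}


def audit(selected: dict, applicants: dict, threshold: float = 0.8) -> list:
    """Return groups whose impact ratio falls below the threshold, for human review."""
    ratios = impact_ratios(selected, applicants)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Made-up numbers from one review period.
applicants = {"group_a": 200, "group_b": 150, "group_c": 120}
selected = {"group_a": 50, "group_b": 24, "group_c": 27}

flagged = audit(selected, applicants)
print(flagged)  # ['group_b']
```

A flag is a prompt for investigation, not proof of discrimination. The point is that a person looks at the flagged stage and decides what to change.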

Balancing Automation with Human Judgment

Ensuring AI Complements, Not Replaces, Human Decision-Making

AI can streamline hiring, but it shouldn’t replace human judgment. While AI excels at analyzing data and identifying patterns, it lacks the ability to understand context or evaluate cultural fit. That’s where you come in. By combining AI insights with your expertise, you can make more balanced and ethical hiring decisions.

Human oversight ensures AI-driven decisions align with your company’s values.

HR professionals can interpret AI outputs and make the final call.

This collaboration reduces the risk of unfair outcomes and ensures decisions are contextually appropriate.

By working alongside AI, you can harness its strengths while mitigating its weaknesses. This balanced approach creates a hiring process that’s not only efficient but also fair and inclusive.
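Here is one hedged sketch of what "complements, not replaces" can mean in practice: the model only sorts candidates into review queues, and a recruiter makes every final decision. The score range, thresholds, and queue names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    candidate_id: str
    score: float  # hypothetical model score between 0 and 1


def route(rec: Recommendation, advance_at: float = 0.75, decline_at: float = 0.35) -> str:
    """Pick a review queue. The model never rejects anyone on its own."""
    if rec.score >= advance_at:
        return "recruiter_review_priority"
    if rec.score <= decline_at:
        return "recruiter_review_before_rejection"
    return "recruiter_full_review"


for rec in [
    Recommendation("c1", 0.90),
    Recommendation("c2", 0.50),
    Recommendation("c3", 0.20),
]:
    print(rec.candidate_id, route(rec))
```

Keeping low scores in a "review before rejection" queue means a biased or mistaken score gets a second look instead of silently removing a candidate.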

The Benefits of Reducing Bias in Hiring

Moral Imperatives

Promoting Fairness and Equal Opportunity

Reducing bias in hiring isn’t just about improving your business—it’s about doing what’s right. When you create a fair hiring process, you give everyone an equal chance to succeed. This means candidates are judged based on their skills and potential, not on stereotypes or assumptions. By removing bias, you help build a workplace that values diversity and inclusion.

Think about the impact this has on your team. Employees who see fairness in hiring feel more valued and engaged. They’re more likely to stay with your company and contribute their best work. Studies show that organizations prioritizing diversity and inclusion experience higher employee satisfaction and retention rates. Plus, fostering a culture of accountability and openness enhances your reputation as an employer of choice, attracting top talent from all backgrounds.

Tip: Regularly review your hiring data and provide ongoing training for hiring managers to maintain a bias-free environment.

Business Advantages

Enhancing Diversity and Innovation

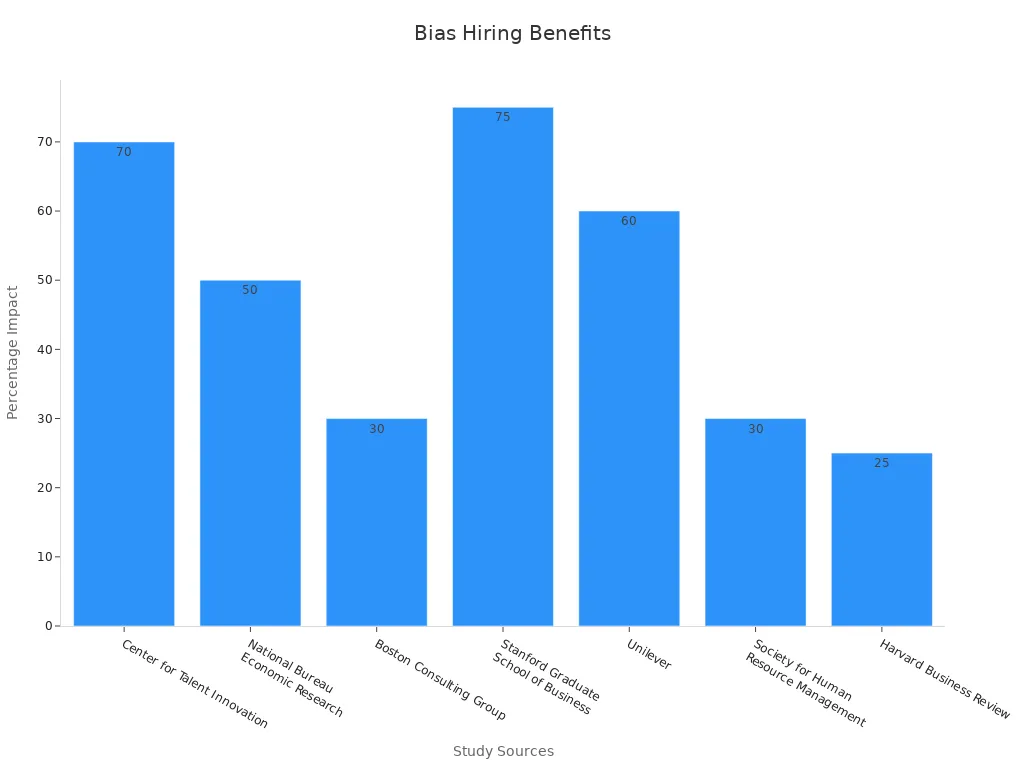

Diverse teams bring fresh ideas and perspectives, which fuel creativity and innovation. When you reduce bias, you open the door to a wider range of talent. This leads to teams that are better equipped to solve problems and adapt to changing markets. In fact, research from the Center for Talent Innovation shows that diverse teams are 70% more likely to capture new markets.

Structured hiring practices, like blind recruitment and standardized interviews, also improve candidate selection. The National Bureau of Economic Research found that these methods increase the likelihood of choosing the best candidate by up to 50%. By embracing diversity, you’re not just building a stronger team—you’re driving your business forward.

Strengthening Employer Branding and Talent Retention

When you commit to reducing bias, you send a powerful message to potential hires. It shows that you value fairness and inclusivity, which strengthens your employer brand. Candidates want to work for companies that align with their values. This commitment also boosts employee loyalty. Workers are more likely to stay with a company that actively promotes diversity and equal opportunity.

Organizations with well-trained hiring managers see 30% better retention rates, according to the Society for Human Resource Management. Harvard Business Review also reports a 25% increase in employee satisfaction when systematic evaluation methods are used. By reducing bias, you’re not just attracting great talent—you’re keeping it.

Note: A diverse and inclusive workplace isn’t just good for employees. It’s a competitive advantage that sets your business apart.

Reducing bias in hiring isn’t just the right thing to do—it’s smart for your business. AI can help you create a fairer, more inclusive process, but it works best when paired with human oversight.

85% of organizations using AI in HR report major efficiency gains.

Companies like Unilever saved 100,000 hours of interview time and cut costs by $1 million with AI tools.

Relying solely on AI can backfire. While it’s great at analyzing data, it can’t understand context or cultural fit. Combining AI with your judgment ensures ethical, balanced decisions.

Adopt AI thoughtfully, and you’ll build a stronger, more diverse team.

FAQ

What is the biggest challenge when using AI in hiring?

AI can inherit biases from the data it’s trained on. If historical hiring data is biased, the AI might replicate those patterns. Regular audits and diverse training datasets help reduce this risk.

Can AI completely eliminate bias in hiring?

Not entirely. AI can reduce bias significantly, but human oversight is essential. Combining AI with human judgment ensures decisions are fair and contextually appropriate.

How do fairness-aware algorithms work?

Fairness-aware algorithms detect and adjust for bias in hiring data. They analyze demographic patterns and ensure balanced outcomes across different groups. This helps create a more equitable process.

Is AI hiring fairer than traditional methods?

Often, yes. AI screening focuses on skills and qualifications and can remove personal identifiers that might trigger unconscious bias. However, it stays fair only if the system is transparent and monitored regularly, because a model trained on biased data can repeat that bias.

How can I ensure my AI hiring tools are ethical?

Start by using diverse training data and conducting regular audits. Always communicate openly about how AI is used in your hiring process. This builds trust and ensures ethical practices.

